Research finds greater societal awareness needed to protect our privacy and data from AI when we die

A research study has suggested that greater societal awareness of ‘ghostbots’ and a ‘Do not bot me’ clause in wills and other contracts could prevent us from being digitally reincarnated without our permission when we die.

The study was carried out by researchers at Queen's University Belfast, Aston Law School and Newcastle University Law School.

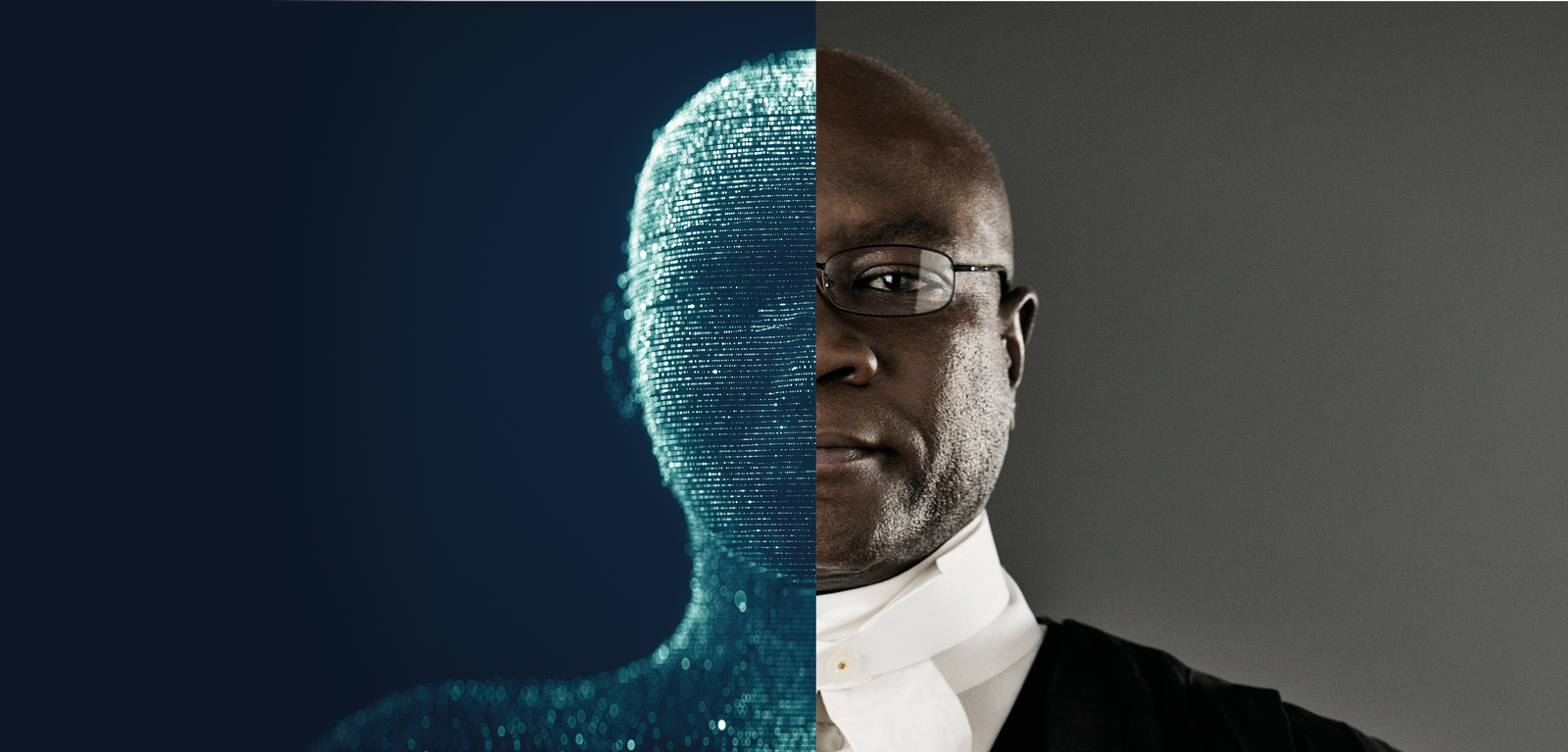

‘Ghostbots’ is a term used to describe the novel phenomenon of using artificial intelligence (AI) to create digital reincarnations of the dead. This can include deepfakes, replicas, holographs, or chatbots that attempt to recreate the appearance, voice and/or personality of dead people.

Recent examples of celebrity ‘ghostbots’ that have piqued media attention include a hologram of Tupac Shakur performing at Coachella in 2012, and a hologram of the late Robert Kardashian, created using deepfake technology and gifted to Kim Kardashian by Kanye West in 2020.

The paper ‘Governing Ghostbots’ was recently published in the Computer Law & Security Review 2023. The research was carried out by Dr Marisa McVey from the School of Law at Queen’s University Belfast, Dr Edina Harbinja from Aston Law School, and Professor Lilian Edwards from Newcastle University Law School.

With much of our lives on social media platforms, data collected from these sites could also be used to emulate how we talk, how we act and how we look, even after we are long gone. Due to the increasing commercialisation of deepfake technology aimed at digital reincarnation, the research looked at ways to protect our privacy (including post-mortem privacy), property, personal data, and reputation.

Speaking about the study, Dr McVey said: “‘Ghostbots’ lie at the intersection of many different areas of law, such as privacy and property, and yet there remains a lack of protection for the deceased’s personality, privacy, or dignity after death. Furthermore, in the UK, privacy and data protection laws do not extend to heirs after death.

“While it is not thought that ‘ghostbots’ could cause physical harm, the likelihood is that they could cause emotional distress and economic harm, particularly impacting upon the deceased’s loved ones and heirs.

“Currently, in the absence of specific legislation in the UK and further afield, it’s unclear who might have the power to bring back our digital persona after we die.”

Recent legal developments in the US and EU have shone a light on the potential for further regulation of ‘ghostbots’. In the state of New York, there is now a right of publicity for celebrities to control the commercial use of their name, image and likeness which extends to forty years post-mortem. There is also a further prohibition on the digital replication of deceased performers. In Europe, the proposed EU AI Act also requires greater transparency for deepfakes and chatbots, which appears to cover ‘ghostbots’.

Dr McVey added: “In the absence of legislation in the UK, one way to protect our post-mortem selves might be through the drafting of a legally binding ‘Do not bot me’ clause that could be inserted into wills and other contracts while people are still alive. This, combined with a global searchable database of such requests, may prove a useful solution to some of the concerns raised by ‘ghostbots’.

“We also suggest that in addition to legal protections, greater societal awareness of the phenomenon of ‘ghostbots’, education on digital legacies and cohesive protection across different jurisdictions are crucial to ensure that this does not happen without our permission.”

The research is part of the Leverhulme Trust-funded project “Modern Technologies, Privacy Law and the Dead”.

The paper ‘Governing Ghostbots’ in the Computer Law & Security Review 2023 is available here: https://www.sciencedirect.com/science/article/pii/S026736492300002X

Media

Media enquiries to Zara McBrearty at Queen’s Communications Office on email: z.mcbrearty@qub.ac.uk